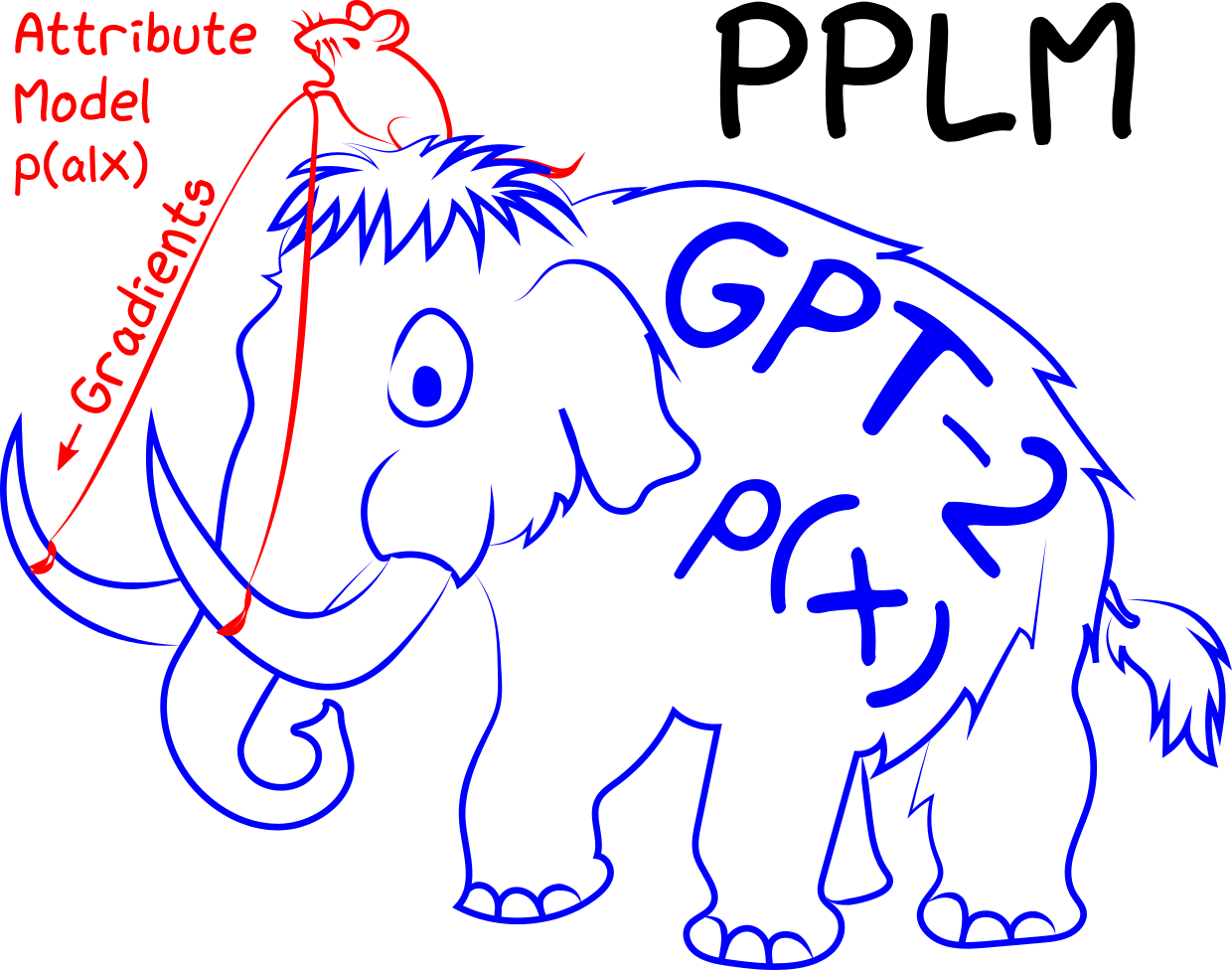

Meet Wooly the mammoth! We’ve been experimenting with ways to “steer” it with a mouse. Is it possible?

Read more about what we learned here:

- Blog: Controlling Text Generation with Plug and Play Language Models

- Paper: https://arxiv.org/abs/1912.02164

- Online demo: https://transformer.huggingface.co/model/pplm

- Code: https://github.com/uber-research/PPLM

- Colab: Colab notebook (no local setup needed!)

(Still can’t believe we did all those.)

Why the mammoth:

Large generative language models like GPT-2 are enormous (over a billion parameters) and are difficult and expensive to train, requiring lots of data. Even if someone has handled the training for you, the trained models behave like giant wooly mammoths: wise but unguided, lumbering wherever they please. They can generate coherent sentences, but one can hardly control where a sentence goes in terms of topic, sentiment, and other attributes.

Why the mouse:

Now imagine we want to control such generation with a mechanism that is online and needs no training: that is, one that acts during generation, with no modification of the model weights. How big a controller do you think is needed?

It turns out we can use tiny attribute models (~100,000 times smaller, roughly the ratio of mouse to mammoth) to steer giant generative models with the proposed PPLM method. The attribute models in our canonical setting are either 1) a user-specified bag of words (zero parameters), or 2) a single linear layer (1K parameters per class). When you sample/generate language, a forward and backward pass sends gradients from the attribute model into GPT-2’s hidden activations, nudging them and thus guiding the generation.
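To make the mouse-and-mammoth idea concrete, here is a toy sketch of the bag-of-words case in plain Python. It is not the code from this repository: the "language model" is a made-up random output matrix `W` over an 8-token vocabulary, the bag is an arbitrary pair of token ids, and all names (`bag_grad`, `steer`, `step_size`, and so on) are hypothetical. It also simplifies by nudging a single hidden vector with plain gradient ascent, which is only meant to illustrate the general idea of gradients from an attribute model pushing hidden activations.

```python
import math
import random


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_probs(W, h):
    # Toy LM head: logits[v] = W[v] . h, followed by a softmax.
    return softmax([sum(w * x for w, x in zip(row, h)) for row in W])


def bag_log_prob(W, h, bag):
    # Bag-of-words attribute model: log of the total probability
    # assigned to the words in the bag. It has no parameters of its own.
    p = next_token_probs(W, h)
    return math.log(sum(p[v] for v in bag))


def bag_grad(W, h, bag):
    # Analytic gradient of bag_log_prob with respect to h: W^T (q - p),
    # where q is p restricted to the bag and renormalized.
    p = next_token_probs(W, h)
    mass = sum(p[v] for v in bag)
    q = [p[v] / mass if v in bag else 0.0 for v in range(len(p))]
    return [
        sum((q[v] - p[v]) * W[v][i] for v in range(len(W)))
        for i in range(len(h))
    ]


def steer(W, h, bag, step_size=0.02, num_steps=10):
    # Nudge the hidden vector along the attribute gradient.
    # The model weights W are never modified.
    h = list(h)
    for _ in range(num_steps):
        g = bag_grad(W, h, bag)
        h = [x + step_size * gx for x, gx in zip(h, g)]
    return h


random.seed(0)
vocab_size, hidden_size = 8, 4  # toy sizes, assumed for illustration
W = [[random.gauss(0, 1) for _ in range(hidden_size)] for _ in range(vocab_size)]
h = [random.gauss(0, 1) for _ in range(hidden_size)]
bag = {2, 5}  # hypothetical token ids standing in for topic words

before = bag_log_prob(W, h, bag)
after = bag_log_prob(W, steer(W, h, bag), bag)
print(f"log p(bag) before: {before:.3f}, after: {after:.3f}")
```

After steering, the bag words should be more likely under the perturbed hidden vector than under the original one, even though the "mammoth" (`W`) was left untouched.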

Why gradients:

Well. Because.

Enjoy! (Or not. Then just enjoy life.)